In a striking move that underscores growing concerns about the impact of social media on young minds, Kansas has launched a significant legal battle against Snap Inc., the parent company of the popular app Snapchat, alleging that its design and content contribute to a mental health crisis among teenagers. Filed on September 24 by Attorney General Kris Kobach in Washington County, this lawsuit claims that Snapchat prioritizes profits over the safety of its youthful user base, exploiting vulnerabilities through manipulative features and exposing teens to harmful material. The state’s accusations come amid a national conversation about the role of digital platforms in shaping adolescent well-being, with many questioning whether tech companies are doing enough to protect their most vulnerable users. This legal action not only targets specific practices of Snapchat but also serves as a broader critique of how social media apps are engineered to keep users engaged at potentially great personal cost. As Kansas steps into this contentious arena, the case could set a precedent for how states address the intersection of technology and mental health, amplifying calls for accountability in an industry often criticized for placing revenue above responsibility. The outcome of this lawsuit may resonate far beyond state lines, potentially reshaping the digital landscape for millions of young users who navigate these platforms daily.

Unpacking the Allegations Against Snapchat

The core of Kansas’s lawsuit hinges on the assertion that Snapchat’s design is inherently manipulative, crafted to ensnare teenagers in a cycle of compulsive use that undermines their mental health. Attorney General Kobach has pointed to specific features like “Snapstreaks,” which encourage users to maintain daily interactions under social pressure, and “infinite scroll,” a mechanism that removes natural breaks in usage, keeping teens glued to their screens for extended periods. Under the Kansas Consumer Protection Act, the state argues that these elements are not merely engaging but exploitative, designed to maximize user time on the app at the expense of well-being. The lawsuit paints a picture of a platform that capitalizes on the psychological vulnerabilities of adolescents, fostering behaviors akin to addiction and contributing to issues like anxiety and sleep deprivation. This deliberate design strategy, Kansas claims, prioritizes corporate profits over the safety of young users, creating a digital environment that is far more harmful than beneficial for its target demographic.

Beyond the app’s structural features, the lawsuit takes aim at Snapchat’s marketing practices, alleging deception in how the platform presents itself as safe for users as young as 12. Despite app store ratings that suggest only mild or occasional exposure to mature content, Kansas contends that harmful material, ranging from explicit imagery to substance-related posts, is pervasive on the platform. This discrepancy between marketed safety and actual user experience forms a critical pillar of the state’s case, which accuses Snap Inc. of misleading parents and teens about the risks involved. The state seeks judicial intervention to halt these practices, demanding fines and court costs while arguing that Snapchat’s failure to deliver on its safety promises violates consumer protection laws. Backed by Cooper & Kirk, a prominent law firm from Washington, D.C., Kansas is mounting a formidable challenge that could force a reevaluation of how social media apps are presented to the public.

Real-World Consequences of Digital Exposure

One of the most poignant aspects of Kansas’s legal action is the tragic story of a Shawnee teenager who lost their life to fentanyl poisoning in 2021 after reportedly connecting with a drug dealer through Snapchat. This heartbreaking incident serves as a stark illustration of the real-world dangers that can arise from the app’s alleged safety shortcomings. The state argues that Snapchat has become a conduit for harmful influences, with drug dealers openly advertising on the platform and placing young users at risk of exposure to illicit substances. This case is not presented as an isolated event but rather as emblematic of a systemic issue, where inadequate content moderation and safety measures fail to shield teens from life-threatening encounters. The emotional weight of such stories amplifies the urgency of the lawsuit, highlighting that the consequences of digital negligence can extend far beyond mental health to physical safety.

Further compounding the issue, Kansas alleges that teens across the state, including in areas like Washington County, regularly encounter content on Snapchat that far exceeds the app’s stated age-appropriate guidelines. From sexual imagery to violent or extremist material, the platform is described as a digital minefield where harmful influences lurk behind every swipe. The state links such exposure to rising rates of anxiety, depression, and other mental health challenges among adolescents, arguing that Snapchat’s environment acts as a catalyst for emotional distress. Beyond the tragedy of the Shawnee teen, this broader pattern of content exposure affects countless users daily, creating a pervasive threat that, in the state’s telling, undermines the well-being of an entire generation. The lawsuit frames Snapchat as a “digital trap,” ensnaring young minds in a web of danger that the app’s creators have failed to adequately address.

Snap Inc.’s Stance Amid Growing Criticism

In the face of Kansas’s allegations, Snap Inc. has maintained that the safety of its users remains a paramount concern, emphasizing the integration of privacy and safety features into Snapchat since its inception. The company acknowledges the dynamic and often unpredictable nature of online threats, noting that no single policy or tool can fully eliminate risks posed by malicious actors who continually adapt their tactics. Snap Inc. highlights its ongoing efforts to refine and update safety strategies, positioning itself as a proactive entity in the fight against digital dangers. This defense suggests a recognition of the challenges inherent in managing a global platform, while asserting that the company is committed to mitigating harm through consistent innovation and vigilance in its approach to user protection.

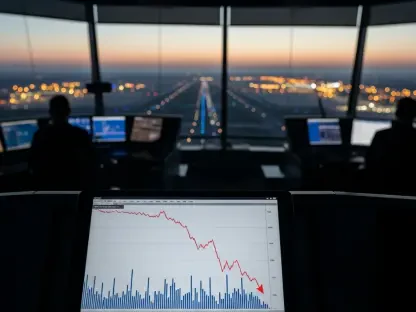

Additionally, Snap Inc. references its own research from 2024, which surveyed over 9,000 individuals across six countries to assess online risks and digital well-being among Gen Z users. The findings were troubling: 80% of teens and young adults reported encountering dangers such as bullying, misinformation, and sexual content. While the company presents this data to demonstrate its dedication to understanding and addressing online risks, the figures may inadvertently lend credence to Kansas’s claims about the prevalence of harmful material on Snapchat. This duality in Snap’s response, acknowledging the scale of digital threats while defending its safety measures, reflects the complex balance tech companies must strike between responsibility and the inherent difficulties of policing vast online spaces. The research underscores the magnitude of the challenge, even as Snap seeks to distance itself from direct blame for the issues raised in the lawsuit.

Societal Implications and the Push for Accountability

The legal action taken by Kansas reflects a broader societal unease about the role of social media in exacerbating adolescent mental health struggles, aligning with a growing consensus among policymakers, educators, and health advocates. Across the nation, there is mounting scrutiny of how app designs, often driven by the pursuit of profit, can intensify conditions like anxiety and depression among young users. Features that promote endless engagement, such as those highlighted in the Snapchat case, are increasingly criticized for prioritizing user retention over emotional and psychological health. This lawsuit adds a significant voice to the chorus calling for tech companies to be held accountable for the digital environments they create, pushing the conversation toward systemic change in how these platforms operate and interact with their youngest audiences.

Should Kansas succeed in its legal battle, the implications could ripple across the country, potentially inspiring other states to pursue similar actions against social media giants. A favorable ruling might lead to industry-wide shifts, prompting companies to rethink app designs and marketing strategies to better protect teen users. It also raises critical questions about the reliability of app store age ratings and the effectiveness of parental control tools, which are often touted as safeguards but may fall short in practice. The case could serve as a catalyst for stricter regulations, compelling platforms to prioritize safety over engagement metrics. As this legal challenge unfolds, it stands as a pivotal moment in the ongoing effort to ensure that the digital world offers a safer space for youth, balancing innovation with the imperative to protect vulnerable populations from the darker corners of technology.

Looking Ahead to Digital Safety Reforms

Kansas’s stand against Snapchat marks a critical juncture in the dialogue surrounding technology and youth mental health, highlighting a pressing need for reform in how social media platforms cater to young users. The allegations of manipulative design and deceptive safety claims, coupled with tragic real-life consequences, underscore this urgency. Snap Inc.’s defense, while highlighting efforts to enhance safety, also reveals the daunting scope of online risks, suggesting that past measures have not fully addressed the depth of the challenge. This case illuminates the intricate interplay between corporate responsibility and the evolving nature of digital threats, setting the stage for broader scrutiny of the industry.

Moving forward, the focus must shift toward actionable solutions that bridge the gap between technological advancement and user protection. Policymakers could consider mandating transparent design practices, ensuring that features like infinite scroll are balanced with mechanisms to encourage healthy usage limits. Tech companies might be encouraged to invest in more robust content moderation systems, curbing exposure to harmful material before it reaches vulnerable eyes. Additionally, collaboration between states, federal agencies, and industry leaders could foster standardized safety benchmarks, creating a unified front against digital dangers. As the impact of Kansas’s lawsuit continues to unfold, it serves as a reminder that safeguarding the next generation in an increasingly connected world demands innovation not just in technology, but in the policies and priorities that shape its use.