A political savant and leader in policy and legislation, Donald Gainsborough is at the helm of Government Curated. His work focuses on a critical, emerging challenge for public administration: transforming decades-old knowledge management practices into a strategic capability powered by artificial intelligence. With AI moving from a theoretical concept to a daily tool, Gainsborough’s insights are essential for government leaders navigating the shift from static information repositories to dynamic, actionable intelligence that can redefine public service. We explore how to govern this new power, build trust in automated systems, and prepare agencies for a future where knowledge is not just found but actively participates in the work itself.

Historically, knowledge management involved employees searching through portals and wikis. Now, AI assistants bring knowledge directly into workflows. What are the biggest operational shifts government agencies must navigate as knowledge becomes a proactive ‘presence’ rather than a static repository? Please share some examples.

The fundamental shift is moving from a “pull” to a “push” model, and the cultural whiplash can be significant. For years, we built these vast digital libraries—wikis, shared drives, portals—and pleaded with our staff to go use them. It felt like a noble but often thankless mission. The reality was that an employee facing a deadline would rather ask the person in the next cubicle than spend twenty minutes digging for a document. Now, AI flips that entirely. Knowledge is no longer a place you have to visit; it’s a presence that travels with you inside your email, your chat, your case management system. When you’re drafting a response, an AI assistant can surface the standard language instantly. Instead of searching for “our policy on X,” you just ask the system, and it provides an answer, not a link. This raises the stakes immensely. A link to a document implies the user still has to do the work of interpretation, but a confident, polished answer carries an authority that can be dangerous if the underlying knowledge is flawed.

AI can act as a ‘bright flashlight in the attic,’ highlighting messy or outdated organizational knowledge. What is the first step a leader should take to avoid creating a ‘confident misinformation machine,’ and how do you recommend they prioritize which knowledge areas to clean up first?

The first step is a humbling one: leaders must admit the flashlight will find dust, cobwebs, and things they’ve forgotten about. You can’t just plug in an AI and expect it to magically organize decades of conflicting policies and outdated documents. It will simply blend them into a coherent-sounding but incorrect answer. So, the first move is strategic triage. Don’t try to boil the ocean by cleaning up everything at once. Instead, identify the high-value, high-frequency knowledge areas that are critical to your mission. Start by asking, “What are the questions our people ask constantly?” The answers usually point to things like onboarding procedures, common HR and IT help questions, procurement steps, and critical customer service responses. Focus your energy on those areas first. Make them undeniably reliable with one authoritative version, clearly named owners, and review dates. That initial cleanup creates a core of trustworthy knowledge that builds momentum and confidence in the system.
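The triage described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the `KnowledgeArea` record, the `questions_per_month` estimate, and the one-year `REVIEW_INTERVAL` are all hypothetical assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple

# Assumed review cycle; an agency would set its own interval.
REVIEW_INTERVAL = timedelta(days=365)


@dataclass
class KnowledgeArea:
    name: str
    questions_per_month: int        # hypothetical estimate of how often staff ask
    owner: Optional[str]            # named content owner, None if unassigned
    last_reviewed: Optional[date]   # None if the content was never reviewed


def cleanup_gaps(area: KnowledgeArea, today: date) -> List[str]:
    """List what keeps this area from being a reliable, authoritative source."""
    gaps = []
    if area.owner is None:
        gaps.append("no owner")
    if area.last_reviewed is None or today - area.last_reviewed > REVIEW_INTERVAL:
        gaps.append("review overdue")
    return gaps


def triage(areas: List[KnowledgeArea], today: date) -> List[Tuple[str, List[str]]]:
    """Return (name, gaps) for areas needing cleanup, most frequently asked first."""
    ranked = sorted(areas, key=lambda a: a.questions_per_month, reverse=True)
    return [(a.name, cleanup_gaps(a, today)) for a in ranked if cleanup_gaps(a, today)]
```

Sorting by question frequency before checking for gaps keeps the cleanup effort on the areas staff rely on most, rather than on whatever happens to be oldest.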

A hub-and-spoke governance model is often effective, involving an executive sponsor, a program lead, a council, and department stewards. Could you walk us through how this model functions in practice and why giving departments ownership over their own authoritative content is so critical for success?

This model works because it balances central oversight with distributed responsibility, which is crucial in sprawling government agencies. At the center (the hub) is the executive sponsor, a senior leader like a Deputy or COO, who provides the political will, removes barriers, and constantly ties the initiative back to the agency’s core priorities. They make it clear this isn’t just an IT project. Working with them is the knowledge management program lead, the day-to-day driver who manages standards, training, and the AI-readiness of the content. A governance council, composed of leaders from the CIO’s office, HR, Legal, and key departments, acts as the “glue,” ensuring enterprise-wide coordination. But the real magic happens in the spokes. Each department must have its own knowledge stewards who are responsible for their specific content. This is non-negotiable. If knowledge management is seen as “someone else’s job,” it will fail. The HR department knows its policies best; the procurement team knows its processes. Giving them ownership ensures the information is accurate, up-to-date, and relevant to the people actually doing the work. The central team provides the framework, but the expertise lives in the departments.

Beyond technical skills, what specific leadership qualities define the ideal person to spearhead an AI-enabled knowledge management program? Please elaborate on how they would translate an agency’s mission into concrete knowledge needs and successfully drive adoption across different departmental silos.

The ideal leader for this is more of a translator and a diplomat than a technologist. First, they must be able to translate the agency’s abstract mission into tangible knowledge requirements. If the mission is to improve frontline service, this leader asks, “What do our service agents need to know, right now, to resolve the top five citizen inquiries consistently and accurately?” They connect high-level strategy to the information needs of someone on the phone with a constituent. Second, they have to be a skilled silo-buster who can drive adoption. This requires credibility with both senior leadership and frontline staff. They need to walk into a room with department heads and negotiate ownership, making it clear how this program helps them achieve their own goals rather than just adding another task to their plate. Finally, they must operate with immense discipline. This isn’t a “launch it and leave it” project. It’s about establishing relentless routines: clear content owners, mandatory review cycles, and an unwavering commitment to maintaining a “single source of truth.” Without that operational rigor, even the most brilliant strategy will crumble.

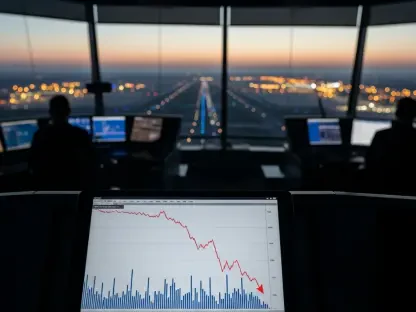

The transition from AI as a reference tool to an engine that takes direct action is a significant turning point. What new governance challenges does this create, and what specific audit trails or human review processes are essential for ensuring accountability when AI starts executing operational tasks?

This is the moment we absolutely must slow down to get it right. When an AI moves from answering a question like “What are the steps to file a request?” to actually filing that request on a user’s behalf, knowledge management merges with operations. The governance challenges explode. Suddenly, we’re not just worried about a wrong answer; we’re worried about an unauthorized action. You need a new layer of accountability built directly into the system. This starts with crystal-clear audit trails. We must be able to see exactly what information the AI used to make its decision, what action it took, when it took it, and who initiated the process. For high-stakes actions—anything involving finances, compliance, or citizen-facing decisions—an ironclad human-in-the-loop review process is essential. The AI might draft the order or populate the form, but a person must give the final approval. We have to maintain absolute human accountability for what the organization does, even if an AI helps execute the task.
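One way to picture the audit trail and approval gate described above is the following Python sketch. It is illustrative only: the `AuditRecord` fields, the `HIGH_STAKES` category names, and the `run_action` helper are hypothetical, and a real system would persist records in tamper-evident storage rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Optional

# Assumed labels for categories that always require human approval.
HIGH_STAKES = {"finance", "compliance", "citizen_decision"}


@dataclass
class AuditRecord:
    action: str                  # what the AI did or proposed to do
    category: str
    sources: List[str]           # documents the AI relied on
    initiated_by: str            # person who started the process
    approved_by: Optional[str]   # human approver, None if not yet approved
    status: str                  # "executed" or "pending_review"
    timestamp: datetime


def run_action(
    action: str,
    category: str,
    sources: List[str],
    initiated_by: str,
    execute: Callable[[], None],
    audit_log: List[AuditRecord],
    approved_by: Optional[str] = None,
) -> AuditRecord:
    """Execute an action only if it is low-stakes or a human has approved it."""
    if category in HIGH_STAKES and approved_by is None:
        status = "pending_review"   # drafted by the AI, waiting for a person
    else:
        execute()
        status = "executed"
    record = AuditRecord(
        action, category, sources, initiated_by, approved_by, status,
        datetime.now(timezone.utc),
    )
    audit_log.append(record)        # every attempt is logged, executed or not
    return record
```

Logging the blocked attempt as well as the executed one means the trail shows what the AI tried to do, not only what it was allowed to do.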

Trust is the centerpiece of modern knowledge management. What are the most effective strategies for building and maintaining user trust in an AI assistant? Please describe a practical feedback loop system that allows users to report errors and helps content owners continuously improve the AI’s accuracy.

You can have the most sophisticated AI in the world, but if your staff doesn’t trust it, they won’t use it. People will forgive a clunky search bar, but they will not forgive an AI that confidently gives them the wrong answer, especially if it leads to an embarrassing mistake. The most effective strategy for building trust is radical transparency and a visible commitment to improvement. This starts with always citing the source. When the AI provides an answer, it must show exactly which policy document or internal guide it came from. The second, and perhaps most critical, element is a frictionless feedback loop. Right next to every answer the AI gives, there should be a simple “Was this helpful?” prompt with thumbs-up and thumbs-down buttons. If a user clicks thumbs-down, it should immediately open a small window allowing them to explain what was wrong. That feedback must be automatically routed not to a general help desk, but directly to the designated owner of that specific piece of content. This transforms knowledge management from a one-way publishing event into a continuous, living conversation where the users and the content owners are partners in making the system smarter and more reliable every day.
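The routing step in that feedback loop can be sketched as follows. This is a hedged illustration under stated assumptions: the `Feedback` record, the `owners` lookup table, and the `FALLBACK_OWNER` used when a cited source has no registered owner are all hypothetical, not features of any particular product.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Assumed fallback when a cited source has no registered owner.
FALLBACK_OWNER = "km-program-lead"


@dataclass
class Feedback:
    answer_id: str
    source_id: str      # the document the AI cited for this answer
    helpful: bool       # True for thumbs-up, False for thumbs-down
    comment: str = ""   # the user's explanation of what was wrong


def route_feedback(
    fb: Feedback,
    owners: Dict[str, str],
    queues: Dict[str, List[Feedback]],
) -> Optional[str]:
    """Queue thumbs-down feedback for the owner of the cited source.

    Returns the owner who received it, or None for thumbs-up feedback.
    """
    if fb.helpful:
        return None
    owner = owners.get(fb.source_id, FALLBACK_OWNER)
    queues.setdefault(owner, []).append(fb)
    return owner
```

Keying the route on the cited source is what makes the citation requirement and the feedback loop reinforce each other: an answer that names its document also names who should fix it.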

What is your forecast for AI-enabled knowledge management in government over the next five years?

Over the next five years, I believe we will see knowledge management evolve from a support function into a core strategic capability that is inseparable from an agency’s performance. The distinction between “knowing” and “doing” will blur significantly as AI assistants become more integrated into operational workflows, not just providing answers but actively helping execute tasks. This will force a much-needed reckoning with data and content governance, as agencies realize that AI is only as good as the information it’s built upon. We’ll see the rise of dedicated knowledge management leaders who are not just librarians but strategists, deeply involved in AI governance and process improvement. Ultimately, the biggest change will be cultural: the burden of knowledge will shift from falling on a few overtaxed experts to being a shared, system-supported responsibility, freeing up experienced staff to focus on the complex, mission-critical challenges that no AI can solve alone.