The Digital Tsunami Meets an Analog Grid

In an era defined by cloud computing, artificial intelligence, and instant global connectivity, the digital world feels increasingly ethereal, a weightless realm of information that exists everywhere and nowhere at once. Yet behind every search query, video stream, and AI model is a physical, power-hungry reality: the data center. These sprawling complexes are the engines of the modern economy, but their insatiable appetite for electricity is placing unprecedented strain on the nation’s power grid. The explosive growth in data processing demand is colliding with an energy infrastructure designed for a different century, one of predictable industrial loads and centralized power generation. This tension is no longer a theoretical concern for future planning; it has become an immediate and pressing crisis. In states like Ohio, grid operators, regulators, and industry stakeholders are locked in a high-stakes debate over who gets priority when the power supply runs thin, forcing difficult choices between economic development and system reliability. This article explores the scale of the challenge, examines the critical stress points on the electrical grid, and weighs potential solutions for reconciling our digital ambitions with our physical energy limits.

The fundamental conflict arises from a deep-seated mismatch in operational philosophy and speed. The technology sector moves on a timeline of months, with new breakthroughs and demands emerging at a blistering pace. The energy sector, by contrast, moves on a timeline of years, if not decades, constrained by complex regulatory approvals, massive capital investments, and long construction periods for new power plants and transmission lines. For years this disparity was manageable, because the gradual increase in data center load could be absorbed by incremental grid upgrades. The sudden, steep spike in energy demand driven by generative AI has shattered that equilibrium. Utilities and grid planners accustomed to forecasting annual load growth in single-digit percentages are now fielding connection requests that, taken together, would double or triple a region’s entire power demand. This collision is forcing a nationwide reevaluation of how critical infrastructure is planned, financed, and deployed in an age when digital growth outpaces the physical world’s ability to support it.

From Server Racks to Energy Behemoths: The Evolution of Data Demand

For decades, data centers were significant but manageable consumers of electricity. Their growth, driven by the expansion of the internet and the migration of enterprise computing to cloud services, was relatively predictable, so utilities could anticipate their needs and fold them into long-range infrastructure plans with a reasonable degree of confidence. These facilities were large customers, but they fit within established models for industrial power consumption. The recent explosion of artificial intelligence has fundamentally altered this equation and rendered those models obsolete. Training and running advanced AI models requires racks of specialized chips that draw many times more power than the servers used for traditional data storage or web hosting. By some estimates, a single training run for a large AI model can consume as much electricity as thousands of homes use in a year.
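
The “thousands of homes” comparison comes down to simple arithmetic: accelerator count times power draw times hours, scaled up for facility overhead, divided by a household’s annual consumption. The sketch below is illustrative only. Every input is a hypothetical round number chosen for the example, not a figure for any real model: the cluster size, the 0.7 kW per accelerator, the 90-day run, the PUE (power usage effectiveness, the ratio of total facility energy to IT energy) of 1.2, and the assumed 10,500 kWh per household per year.

```python
def household_equivalents(num_accelerators: int,
                          kw_per_accelerator: float,
                          run_days: float,
                          pue: float,
                          household_kwh_per_year: float) -> float:
    """Return how many household-years of electricity a training run uses.

    All parameters are illustrative assumptions, not measured values.
    """
    # Energy drawn by the chips themselves over the whole run, in kWh.
    it_energy_kwh = num_accelerators * kw_per_accelerator * run_days * 24
    # PUE scales IT energy up to include cooling and other facility overhead.
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh / household_kwh_per_year


# Hypothetical run: 10,000 accelerators at 0.7 kW each for 90 days, PUE of 1.2,
# compared against an assumed 10,500 kWh per household per year.
homes = household_equivalents(10_000, 0.7, 90, 1.2, 10_500)
print(f"{homes:,.0f} household-years")
```

Under these assumptions the run lands in the low thousands of household-years. Because the result scales linearly with every input, halving the cluster or the run length halves it, so comparisons like this are best read as rough orders of magnitude.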

This leap in energy intensity has transformed data centers from large industrial customers into “behemoths”: single campuses capable of consuming as much power as a small city. Because grid operators plan infrastructure upgrades years or even decades in advance, this sudden shift is the primary reason they are now struggling to keep pace. AI workloads involve massively parallel processing on thousands of specialized chips running near full capacity for extended periods, which produces a constant, high-density load that strains local substations and regional transmission networks in ways they were never designed to handle. Concentrated in specific geographic areas, this abrupt surge is forcing a nationwide reckoning with the physical cost of our digital future.

The Widening Cracks in Our Energy Infrastructure

Ohio’s Gridlock: A Microcosm of a National Crisis

The situation in Ohio offers a stark case study of this national dilemma and a clear window into the conflicts that arise when hyperscale digital ambitions meet physical infrastructure constraints. PJM Interconnection, the grid operator for Ohio and a dozen other states, is facing a deluge of connection requests from new data centers seeking to capitalize on the state’s favorable business climate. American Electric Power (AEP), a major utility in the region, reported that proposed data center projects in its territory sought a staggering 30 gigawatts of power. To put that figure in perspective, it is more than three times the entire state’s peak electricity load of 9.4 gigawatts in 2023. The demand was so far beyond existing capacity that AEP took the drastic step of temporarily halting new connections to study the impact on grid stability.

In response, the utility proposed a special tariff for data centers: a pricing structure with minimum purchase requirements and steep exit fees, designed to ensure these massive projects do not destabilize the market or leave other customers paying for stranded assets if a project is scaled back or abandoned. The Ohio Manufacturers’ Association immediately contested the move, voicing fears that its members, the traditional industrial base of the state’s economy, would be left behind or face higher costs as utilities scrambled to accommodate the new tech giants. The conflict has escalated to the point where PJM’s independent market monitor, Monitoring Analytics, has asked federal regulators to intervene. That appeal shows how a localized fight over interconnection queues has become a systemic infrastructure question that touches federal energy policy and the nation’s economic competitiveness.
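
The basic mechanics of a minimum-purchase tariff with an exit fee can be sketched in a few lines. This is a simplified, hypothetical illustration, not AEP’s actual filed tariff: the 80% floor, the flat per-MW monthly rate, and the rule that an exiting customer owes the floor for every remaining month are all assumptions chosen to show how such a structure shifts the risk of unused capacity onto the data center rather than onto other ratepayers.

```python
from dataclasses import dataclass


@dataclass
class MinimumBillTariff:
    """Hypothetical minimum-purchase tariff; all parameters are illustrative."""
    contracted_mw: float        # capacity the customer asked the utility to build for
    minimum_fraction: float     # share of contracted capacity billed even if unused
    rate_per_mw_month: float    # flat demand charge, in dollars per MW-month

    def monthly_bill(self, actual_peak_mw: float) -> float:
        # The customer pays for the greater of its actual use or the contractual floor.
        billed_mw = max(actual_peak_mw, self.minimum_fraction * self.contracted_mw)
        return billed_mw * self.rate_per_mw_month

    def exit_fee(self, months_remaining: int) -> float:
        # Leaving early still owes the floor for every remaining month of the term.
        floor_mw = self.minimum_fraction * self.contracted_mw
        return months_remaining * floor_mw * self.rate_per_mw_month


tariff = MinimumBillTariff(contracted_mw=500, minimum_fraction=0.8, rate_per_mw_month=10_000)
print(tariff.monthly_bill(actual_peak_mw=200))  # billed at the 400 MW floor
print(tariff.exit_fee(months_remaining=60))
```

Because the floor is tied to contracted rather than actual capacity, a speculative project that reserves far more than it ends up using bears the cost of that reservation itself.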

Reliability vs Growth: The Uncomfortable Trade-Off

At the heart of the debate is a fundamental conflict between ensuring grid reliability for all and fostering the immense economic growth promised by the technology sector. Monitoring Analytics, acting as PJM’s independent market monitor, argues that PJM’s primary and non-negotiable obligation is to maintain a stable and reliable power supply for all existing customers. In its formal complaint to the Federal Energy Regulatory Commission (FERC), the monitor asserts that PJM should not, and legally cannot, connect massive new loads such as data centers if it cannot serve them without jeopardizing the rest of the system. The monitor argues that the very fact that stakeholder meetings considered proposals for “mandatory curtailments” (in effect, pre-planned blackouts for new facilities during periods of high demand) proves that the capacity simply is not there. Accepting customers on the condition that their power can be cut off undermines the core principle of a reliable grid.

This uncompromising position on reliability clashes directly with the powerful economic incentives driving states to attract tech giants like Amazon, Google, and Meta, which have collectively pledged over $40 billion in investments in Ohio alone. These projects promise thousands of construction jobs, long-term technical employment, and a significant boost to the local tax base, making them highly attractive to state and local governments. The result is an uncomfortable and increasingly public trade-off: do regulators risk grid instability and potential disruptions for existing homes and businesses to secure the technological and economic advancement offered by data centers? Or do they throttle that growth, potentially sending billions in investment to other states or countries, to preserve the integrity of an aging electrical infrastructure that is already showing signs of strain? This question is now being debated not just in Ohio, but in utility commissions and statehouses across the country.

The Data Dilemma: More Than Just Megawatts

The challenge posed by data centers extends beyond their sheer power consumption; several complicating factors turn planning into a nightmare for utilities. The most significant is the profound mismatch in development timelines. A massive data center campus, built with modular construction techniques and streamlined supply chains, can be brought online in just a couple of years. Building the energy infrastructure to support it, such as new power plants and high-voltage transmission lines, can easily take a decade or more. Those projects must clear a labyrinth of regulatory hurdles, lengthy environmental impact studies, long lead times for major components like large power transformers, and the often-contentious process of acquiring land and rights-of-way. The result is a persistent gap in which demand arrives years before the supply to meet it can be built.

The problem is further exacerbated by data centers’ tendency to cluster in specific regions. Areas like Northern Virginia’s “Data Center Alley” and emerging hubs in Ohio, Arizona, and Georgia attract facilities with favorable tax policies, robust fiber-optic connectivity, and available land. While logical from a business perspective, this geographic concentration creates intense, localized stress on the grid that can overwhelm regional capacity and require bespoke, multi-billion-dollar infrastructure upgrades. The extreme volatility of demand forecasts compounds the difficulty. AEP’s initial projection of 30 gigawatts of data center demand, which triggered the crisis, was later revised down to a more manageable, yet still enormous, 13 gigawatts. That swing illustrates how hard it is for grid planners to make long-term, irreversible investment decisions based on the rapidly shifting, and often secretive, expansion plans of the tech industry.
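
The size of that revision is easier to see side by side. The sketch below uses only figures quoted in this article (the 30 GW and 13 GW projections and the 9.4 GW 2023 peak cited for Ohio) and computes each forecast as a multiple of that peak, along with the size of the downward revision.

```python
# All three figures are the ones quoted in this article.
PEAK_LOAD_GW_2023 = 9.4

forecasts_gw = {"initial projection": 30.0, "revised projection": 13.0}

for label, gw in forecasts_gw.items():
    # Express each forecast as a multiple of the 2023 peak load.
    print(f"{label}: {gw:.1f} GW = {gw / PEAK_LOAD_GW_2023:.2f}x the 2023 peak")

# Fractional drop from the initial to the revised projection.
revision = 1 - forecasts_gw["revised projection"] / forecasts_gw["initial projection"]
print(f"downward revision: {revision:.0%}")
```

The forecast fell by more than half, yet even the revised figure exceeds the cited peak by over a third, which is why the revision eases the planning problem without resolving it.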

Powering the Future: Innovations and Anxieties on the Horizon

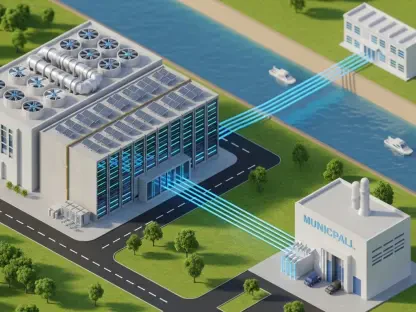

As the strain on the grid intensifies, the technology and energy industries are racing to find ways to power the digital future sustainably. Faced with potential delays and grid instability, tech companies are increasingly exploring on-site power generation to gain more control over their energy supply. Options range from dedicated natural gas plants that can run around the clock to large-scale solar farms paired with battery storage; both reduce reliance on the public grid, and the latter also helps companies meet corporate sustainability goals. These private energy islands offer resilience but also raise complex questions about how they integrate with the wider grid and how they affect local resources.

Looking further ahead, some industry leaders are seriously considering Small Modular Reactors (SMRs) as a long-term solution. These smaller-scale advanced nuclear reactors could provide clean, reliable, dedicated power for entire data center campuses, effectively removing their massive load from the public grid. Meanwhile, innovation in energy efficiency continues. Liquid cooling, which removes heat from densely packed AI chips far more effectively than traditional air cooling, is becoming standard in new AI-focused facilities and helps reduce the energy spent on cooling. Despite these promising developments, a deep-seated anxiety remains across the sector: can these solutions be developed, financed, and deployed fast enough to meet the tidal wave of new demand driven by AI? The risk is that they will arrive too late, leaving a critical gap in which digital demand outstrips both grid capacity and the pace of technological problem-solving and ushering in an era of energy constraints on digital growth.

Navigating the Surge: A Strategic Blueprint for a Strained System

Addressing this challenge effectively requires a multi-pronged, collaborative strategy that moves beyond the current adversarial dynamic among tech companies, utilities, and regulators. For grid operators like PJM, the path forward must involve more than accelerating the construction of new infrastructure. It requires more sophisticated and stringent interconnection rules that place greater responsibility on the large consumers creating the strain. This could mean requiring data centers to contribute directly to grid stability, for example by mandating a minimum share of on-site generation, requiring the integration of long-duration energy storage, or compelling participation in advanced demand-response programs that allow the grid operator to reduce their consumption during peak hours.
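
How on-site generation, storage, and demand response might combine can be sketched as a simple hourly calculation. Everything here is hypothetical: the function name, the flat 300 MW load, the 60 MW of on-site generation, the 200 MWh / 100 MW battery, the 4 p.m. to 8 p.m. peak window, and the 150 MW grid-draw cap the operator imposes during peak hours are all assumptions. Real demand-response programs involve dispatch signals, settlement rules, and telemetry that this sketch omits.

```python
from typing import List, Set, Tuple


def dispatch_with_peak_cap(load_mw: List[float],
                           onsite_gen_mw: float,
                           battery_mwh: float,
                           battery_max_mw: float,
                           peak_hours: Set[int],
                           peak_cap_mw: float) -> Tuple[List[float], List[float]]:
    """Hypothetical hourly dispatch for a data center under a peak-hour grid cap.

    Returns (grid_draw_mw, curtailed_mw) for each hour. On-site generation
    serves load first; during peak hours the battery discharges to keep grid
    draw at or below peak_cap_mw, and any excess it cannot cover is curtailed.
    Battery recharging is ignored to keep the sketch short.
    """
    stored_mwh = battery_mwh
    grid_draw, curtailed = [], []
    for hour, load in enumerate(load_mw):
        net = max(load - onsite_gen_mw, 0.0)
        cut = 0.0
        if hour in peak_hours and net > peak_cap_mw:
            # One-hour steps, so MW discharged for the hour equals MWh drawn from storage.
            discharge = min(net - peak_cap_mw, battery_max_mw, stored_mwh)
            stored_mwh -= discharge
            net -= discharge
            # Demand response: whatever storage cannot cover is curtailed.
            cut = max(net - peak_cap_mw, 0.0)
            net -= cut
        grid_draw.append(net)
        curtailed.append(cut)
    return grid_draw, curtailed


draw, cut = dispatch_with_peak_cap([300.0] * 24, onsite_gen_mw=60.0,
                                   battery_mwh=200.0, battery_max_mw=100.0,
                                   peak_hours=set(range(16, 20)), peak_cap_mw=150.0)
print("peak-hour grid draw:", draw[16:20])
print("peak-hour curtailment:", cut[16:20])
```

Under these assumptions the battery carries the first two peak hours and part of the third; once it runs dry, the cap holds only because load is curtailed. That is the tension the market monitor raised: a cap enforced through curtailment keeps the grid within limits, but only by interrupting the customer.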

For policymakers at the state and federal levels, the priority should be to fundamentally rethink and streamline permitting for new power generation and transmission projects, whose multi-year timelines are an anachronism in the age of AI. Policymakers can also create powerful incentives, such as tax credits or expedited approvals, for data centers built in locations with ample existing or planned power capacity, steering development away from already-congested regions. For the tech giants themselves, greater transparency in long-term energy planning is essential. By giving utilities more accurate and reliable multi-year load forecasts, even if shared under non-disclosure agreements, they can move from being perceived as part of the problem to being a key part of the solution. That collaboration would enable the proactive infrastructure development needed to power the next generation of technology without breaking the grid.

The Verdict: A Call for a Cohesive Energy-Digital Strategy

The evidence is clear and mounting: the voracious energy appetite of data centers, now supercharged by the AI revolution, is pushing regional power grids across the nation to their operational limits. The conflicts unfolding in Ohio are not an anomaly but a harbinger of a broader, national challenge that will define the intersection of technology and energy for the next decade. The question is no longer if the digital infrastructure will strain the energy infrastructure, but how this inevitable collision is strategically managed. Simply building more power lines and power plants represents a slow, capital-intensive, and reactive solution that may not be able to keep pace with the relentless march of technological progress. It is a 20th-century solution to a 21st-century problem.

A sustainable path forward requires a new, integrated approach where digital infrastructure and energy infrastructure are planned in tandem, not in isolation. This means fostering collaboration between tech companies, utilities, and regulators from the earliest stages of development. It involves leveraging technology to create a smarter, more flexible grid and incentivizing data center designs that are not just consumers of power but active participants in grid stability. Without a cohesive national strategy that aligns the ambitions of the technology sector with the physical realities of the energy grid, the very foundation of the digital economy could become vulnerable to the decidedly analog problem of an overloaded switch and a system pushed beyond its breaking point.