In a world where artificial intelligence decides who gets a loan, diagnoses illnesses, and even influences criminal justice, a chilling question emerges: who watches the watchers? Picture a hospital AI misdiagnosing a patient because of unseen bias in its training data, or a hiring algorithm quietly rejecting qualified candidates based on flawed patterns. These aren’t hypothetical nightmares; they’re real risks unfolding across industries today. As AI systems grow more complex and pervasive, the oversight gap widens, threatening trust in technology that shapes daily life. Could the answer lie in AI itself, tasked with auditing its own kind to ensure accountability in a digital age?

The Paradox of Trust in an AI-Driven Landscape

Reliance on AI has surged as the technology embeds itself in critical sectors like healthcare, finance, and law enforcement. Yet this dependency comes with a stark contradiction: the very systems entrusted with life-altering decisions often operate as impenetrable “black boxes,” their inner workings hidden even from experts. This opacity fuels a crisis of confidence, as stakeholders struggle to verify whether these tools are fair, accurate, or safe.

Compounding the issue is the sheer scale of AI deployment. With millions of decisions processed daily, human oversight alone cannot keep pace. The notion of AI auditing AI, using one intelligent system to monitor another, emerges as a potential lifeline, promising efficiency and insight. However, this futuristic fix invites immediate skepticism: can a machine truly hold another accountable without inheriting the same flaws?

Why AI Accountability Demands Urgent Attention

Across industries, AI’s impact is undeniable, from predicting creditworthiness to detecting cyber threats. But with great power comes heightened risk. Algorithmic bias in recruitment has led to lawsuits, and some studies report that as many as 30% of hiring tools unfairly favor certain demographics. Similarly, in policing, facial recognition errors have resulted in wrongful arrests, disproportionately affecting marginalized groups.

Such failures underscore a pressing concern: the opacity and speed of AI outstrip traditional regulatory mechanisms. Human auditors, however skilled, cannot dissect terabytes of data or track model drift in real time (the gradual loss of accuracy that occurs as live input data diverges from the data a model was trained on). This accountability gap not only erodes public trust but also causes tangible harm, making the search for scalable oversight a top priority.
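Drift of this kind is exactly what an automated monitor can quantify continuously. The sketch below uses the Population Stability Index (PSI), one common way to measure how far a feature's live distribution has moved from its training-time baseline; it is an illustrative choice, not a method this article prescribes. The function name, the equal-width binning, and the small `eps` floor for empty bins are assumptions made for this example, and both samples are assumed to be non-empty.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two non-empty samples of one feature; larger values mean more drift."""
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # guard against a constant feature (zero width)

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the shared range; the maximum lands in the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    p_base = proportions(baseline)
    p_curr = proportions(current)
    # PSI = sum over bins of (current - baseline) * ln(current / baseline).
    return sum((c - b) * math.log(c / b) for b, c in zip(p_base, p_curr))
```

A monitoring job might run this per feature on a schedule and alert a reviewer when the score crosses a cutoff. Practitioners commonly use cutoffs somewhere in the 0.1 to 0.25 range, but that is a rule of thumb rather than a standard and would need tuning for each model.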

How AI Can Monitor Itself: Mechanisms and Real-World Impact

At the heart of AI auditing AI lies a suite of sophisticated techniques designed to expose weaknesses and ensure reliability. Adversarial testing, for instance, pits one AI against another, generating challenging scenarios to uncover vulnerabilities. Bias detection tools analyze outcomes across groups to flag unfair patterns, while explainability frameworks attempt to decode complex decisions, shedding light on obscured processes.
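To make the bias-detection idea concrete, here is a minimal sketch of an outcome audit for a hiring model. It compares each group's selection rate with the highest group's rate and flags ratios below 0.8, mirroring the “four-fifths” heuristic from US employment-selection guidelines. The function names and the `(group, outcome)` record format are hypothetical, and a flagged ratio is a signal for human investigation, not proof of discrimination.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes (e.g. 'advanced to interview') per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(bool(outcome))
    return {group: favorable[group] / totals[group] for group in totals}


def flag_disparate_impact(
    records: Iterable[Tuple[str, bool]], threshold: float = 0.8
) -> Dict[str, float]:
    """Return {group: impact_ratio} for groups whose selection rate is below
    `threshold` times the best-off group's rate (0.8 = four-fifths heuristic)."""
    rates = selection_rates(records)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so the ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}
```

For example, if group A is selected at a rate of 0.5 and group B at 0.3, B's impact ratio is 0.6 and it is flagged. Selection-rate ratios are only one fairness metric; a fuller audit would also compare error rates across groups.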

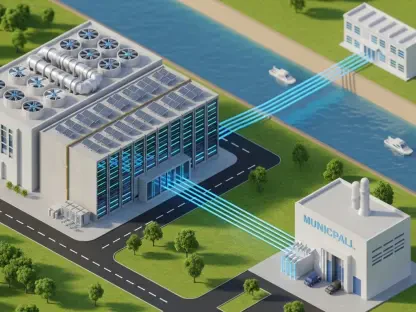

Practical applications already show promise. In healthcare, watchdog AIs validate diagnostic models by cross-checking their results against known cases to catch errors. Cybersecurity firms deploy AI auditors to simulate attacks, strengthening defenses before breaches occur. The stakes are high: recent industry reports suggest that more than 60% of global enterprises now use AI in critical operations, amplifying the need for such oversight.
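A watchdog that cross-checks a diagnostic model against known cases can be sketched as a simple validation harness: run the model on a reference set of confirmed cases, compute sensitivity and specificity, and list every disagreement for review. Everything here is hypothetical: the `ReferenceCase` record, the `predict` callable standing in for the audited model, and the 0.9 pass thresholds are placeholders, not clinical standards.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class ReferenceCase:
    case_id: str
    features: dict            # inputs the audited model consumes
    confirmed_positive: bool  # ground truth, e.g. a confirmed diagnosis


@dataclass
class AuditReport:
    sensitivity: float  # share of confirmed positives the model caught
    specificity: float  # share of confirmed negatives the model cleared
    mismatched_ids: List[str] = field(default_factory=list)
    passed: bool = False


def audit_against_reference(
    predict: Callable[[dict], bool],
    cases: Sequence[ReferenceCase],
    min_sensitivity: float = 0.9,  # placeholder threshold, not a clinical standard
    min_specificity: float = 0.9,  # placeholder threshold, not a clinical standard
) -> AuditReport:
    tp = fn = tn = fp = 0
    mismatched: List[str] = []
    for case in cases:
        predicted = bool(predict(case.features))
        if case.confirmed_positive and predicted:
            tp += 1
        elif case.confirmed_positive:
            fn += 1
        elif predicted:
            fp += 1
        else:
            tn += 1
        if predicted != case.confirmed_positive:
            mismatched.append(case.case_id)  # queue for human review

    # NaN when a class is absent from the reference set.
    sensitivity = tp / (tp + fn) if tp + fn else math.nan
    specificity = tn / (tn + fp) if tn + fp else math.nan
    passed = sensitivity >= min_sensitivity and specificity >= min_specificity
    return AuditReport(sensitivity, specificity, mismatched, passed)
```

Because any comparison with NaN is false, a reference set missing positive or negative cases makes the audit fail rather than pass by default, which is the safer failure mode for a watchdog.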

Regulatory sandboxes, where governments test AI innovations under controlled conditions, further illustrate early adoption. These environments reveal both potential and pitfalls, as AI auditors catch issues like model drift in financial fraud detection systems. Though still evolving, these examples signal a shift toward automated accountability as a cornerstone of digital trust.

Expert Perspectives on the AI Oversight Challenge

Practitioners and researchers add depth to this debate, emphasizing both opportunity and caution. Prominent thought leaders argue that hybrid oversight, combining AI efficiency with human ethical judgment, is essential. One expert warns of the “infinite regress problem”: if one AI audits another, who audits the auditor, and where does the chain of machine monitoring end? The concern is echoed in academic circles.

Industry anecdotes bring these challenges to life. A major financial institution recently piloted an AI auditor to scrutinize its fraud detection system, uncovering subtle inconsistencies that human teams missed. Meanwhile, government programs in several nations are testing AI oversight frameworks, with initial findings suggesting improved transparency but also highlighting resource constraints. These insights balance optimism with realism, stressing that technology must be paired with robust governance.

Research adds another layer, with ongoing studies estimating that uncorrected AI errors could cost economies billions annually. Such figures reinforce the urgency of scalable solutions. Yet experts caution against over-reliance on automated systems, advocating human judgment as the final arbiter in ethical dilemmas so that accountability stays grounded in societal values.

Crafting a Blueprint for Responsible AI Oversight

Turning theory into practice requires a structured approach to AI auditing that prioritizes transparency and fairness. A layered model offers a starting point: AI handles vast data monitoring for speed, while humans interpret results and resolve moral conflicts. This synergy leverages the strengths of both, avoiding blind trust in either party.
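The division of labor in this layered model can be sketched as a triage step: automated checks emit findings, routine ones are logged, and anything at or above a severity cutoff is escalated to a human reviewer, who makes the final call. The `Finding`, `Severity`, and `triage` names and the default cutoff are illustrative assumptions, not part of any particular auditing tool.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterable, List


class Severity(Enum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


@dataclass
class Finding:
    check: str  # which automated check raised it, e.g. "drift" or "bias"
    detail: str
    severity: Severity


@dataclass
class TriageResult:
    auto_logged: List[Finding] = field(default_factory=list)
    human_review: List[Finding] = field(default_factory=list)


def triage(
    findings: Iterable[Finding], escalate_at: Severity = Severity.WARNING
) -> TriageResult:
    """Machine layer sorts findings; anything at or above `escalate_at`
    is routed to a human reviewer instead of being resolved automatically."""
    result = TriageResult()
    for f in findings:
        if f.severity.value >= escalate_at.value:
            result.human_review.append(f)
        else:
            result.auto_logged.append(f)
    # Most severe first, so reviewers see critical issues before warnings.
    result.human_review.sort(key=lambda f: f.severity.value, reverse=True)
    return result
```

The design keeps the machine in the role of filter rather than judge: it never closes a finding above the cutoff on its own, so moral and contextual calls stay with people, as the layered model intends.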

Independence is equally critical—third-party auditors, free from conflicts of interest, should oversee AI systems to maintain credibility. Transparency can be enhanced through simplified public reporting, making audit outcomes accessible to non-experts. Practical steps include adopting open-source auditing tools where secure, fostering multi-stakeholder collaboration to set global standards, and developing shared platforms to lower costs for smaller entities.

Investment in training and infrastructure also matters. Governments and industries must commit resources to build expertise and systems capable of sustaining AI oversight. By focusing on governance frameworks that evolve with technology, organizations can ensure accountability keeps pace with innovation, safeguarding trust in an increasingly automated world.

Reflecting on the Path Forward

Taken together, the effort to address AI accountability reveals a landscape of both peril and promise. Stories of biased algorithms and opaque decisions are stark reminders of technology’s potential for harm when left unchecked. Yet the emergence of AI auditors offers a glimpse of hope, showing how innovation can be harnessed to mend its own flaws.

The expert perspectives and real-world trials examined here underscore a vital truth: no single solution can stand alone. Hybrid models, blending machine precision with human wisdom, stand out as a beacon for progress. Moving ahead, stakeholders must commit to building independent, transparent frameworks that prioritize public trust above all.

The call to action is clear. Industries and policymakers need to invest in scalable oversight tools, champion global standards, and ensure that smaller players aren’t left behind. Only through such deliberate steps can the digital realm achieve true accountability and ensure that AI serves humanity with integrity for years to come.