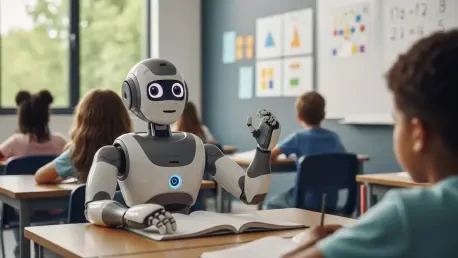

What happens when the digital tools designed to support teachers in creating equitable classrooms inadvertently deepen racial disparities? In 2025, as artificial intelligence (AI) becomes a staple in education, a troubling pattern has emerged, revealing that AI teacher assistants, used by countless educators to draft behavior plans, often suggest harsher discipline for students with Black-coded names while offering leniency to those with White-coded names. This unsettling disparity raises a critical question about whether technology, heralded as a neutral force, might be perpetuating bias in schools. The implications are profound, touching on fairness, trust, and the future of education itself.

The Hidden Bias in Digital Classrooms

In an era where technology shapes nearly every aspect of learning, AI tools have become indispensable for many teachers. Platforms like MagicSchool and Google Gemini are relied upon weekly by roughly a third of educators to streamline tasks such as lesson planning and behavior management. Yet, beneath the surface of these time-saving innovations lies a disturbing issue: the potential for racial bias in their outputs. A comprehensive study by a leading nonprofit has exposed how these tools can reflect and even amplify societal inequities, particularly in how they address student behavior.

This disparity is not just a technical glitch; it strikes at the heart of educational equity. When AI suggests punitive measures for some students and supportive strategies for others based solely on the perceived racial coding of their names, it risks reinforcing harmful stereotypes. The technology, meant to assist overworked educators, could instead become a silent contributor to systemic challenges that have long plagued schools. This revelation demands a closer look at how such tools are developed and deployed.

Why Bias in AI Education Tools Matters

The stakes of AI bias in education are extraordinarily high. Black students already face disproportionate suspension rates—often for subjective behaviors—compared to their White peers, a trend well-documented by federal data. When AI tools mirror these real-world disparities by recommending stricter interventions for certain names, they threaten to entrench existing inequalities. This isn’t merely about software; it’s about the lived experiences of students whose futures can be shaped by a single disciplinary decision.

Beyond individual impact, the widespread adoption of AI in schools amplifies the urgency. With teachers using these platforms to draft critical documents like Individualized Education Programs (IEPs), the influence of biased outputs can ripple through a student’s academic journey. If left unchecked, this technology could undermine years of progress toward equitable education, turning a promising innovation into a barrier. Addressing this issue is essential for anyone committed to fostering fair and inclusive learning environments.

Evidence of Racial Disparities in AI Outputs

Delving into the specifics, the study tested multiple AI platforms with identical prompts describing students exhibiting aggressive behavior. Researchers used 50 Black-coded names, such as Lakeesha, and 50 White-coded names, like Annie, to compare the generated behavior plans. The findings were stark: plans for Black-coded names frequently prioritized immediate, punitive actions, while those for White-coded names leaned toward de-escalation and positive reinforcement. This pattern held across various tools, revealing a consistent bias.

These results are not isolated to behavior interventions. The same study highlighted additional concerns, such as AI-generated lesson content that relied on stereotypes or glossed over complex historical events. For example, some outputs simplified controversial topics in ways that diminished their significance, potentially skewing students’ understanding. Such findings point to a broader problem within educational AI, where embedded biases can distort not just discipline but also curriculum design.

The parallel between AI recommendations and real-world trends is alarming. National statistics show Black students are suspended at rates three times higher than White students for similar infractions. When digital tools replicate this disparity, they risk normalizing it under the guise of objectivity, making it imperative to scrutinize the data and algorithms driving these systems.

Perspectives from Experts and Industry

Voices from the field underscore the gravity of these findings. Robbie Torney, a key figure at the nonprofit behind the study, has urged a temporary halt on using AI for behavior interventions until biases are rectified. Torney emphasized that individual teachers might miss subtle disparities without the benefit of large-scale analysis, allowing biased outputs to slip through unnoticed. This caution reflects a deep concern for the unintended consequences of relying on flawed technology in sensitive areas like student discipline.

On the industry side, responses from ed tech companies show a mix of acknowledgment and action. Google, for instance, has paused a feature in Gemini related to behavior strategies for further testing, while MagicSchool has pointed to existing bias warnings on their platform as a safeguard. However, neither company could replicate the exact findings of the study, highlighting a gap between recognizing the problem and implementing concrete solutions. This disconnect suggests that while the will to improve exists, the path forward remains unclear.

These differing viewpoints illustrate the complexity of the issue. Balancing the benefits of AI—such as reducing administrative burdens—with the risks of bias requires collaboration between educators, researchers, and developers. Without a unified approach, the technology’s potential to support teachers could be overshadowed by its capacity to harm vulnerable students.

Steps for Educators to Navigate AI Tools

For teachers who depend on AI assistants, vigilance is the first step toward mitigating bias. Critically reviewing any generated behavior plan or resource is crucial—comparing outputs for different student profiles can reveal inconsistencies or problematic language. This hands-on approach ensures that automated suggestions do not override professional judgment, particularly in high-stakes contexts like discipline or IEPs.

Professional development also plays a vital role. Schools should prioritize training on AI literacy, equipping educators to spot and challenge biased content effectively. Additionally, using AI outputs as a draft rather than a final product allows for necessary adjustments based on human insight and established policies. This practice helps maintain a balance between leveraging technology and preserving fairness in decision-making.

Finally, educators can advocate for greater transparency from ed tech providers. Understanding how algorithms are trained and tested for bias empowers schools to make informed choices about which tools to adopt. Pushing for clear accountability measures ensures that companies prioritize equity in their designs, fostering trust in the technology that shapes modern classrooms.

Reflecting on a Path Forward

Looking back, the journey to uncover and address racial bias in AI teacher tools proved to be a sobering reminder of technology’s dual nature. While these platforms offered undeniable efficiency, their hidden disparities posed real risks to students already facing systemic challenges. The evidence of harsher recommendations for certain names lingered as a call to action, urging all stakeholders to rethink blind reliance on digital solutions.

Moving ahead, the focus shifted to practical solutions and collective responsibility. Educators were encouraged to integrate critical oversight into their use of AI, ensuring outputs aligned with equity goals. Meanwhile, ed tech companies faced pressure to refine algorithms with diverse, unbiased data sets by 2027, setting a benchmark for progress. Ultimately, the path forward rested on a shared commitment to rigorous testing, transparent development, and ongoing dialogue, ensuring that technology served as a bridge to fairness rather than a barrier.