The UK Government has unveiled a research program dedicated to systemic AI safety, aimed at building public trust as AI technologies become more widespread. The initiative, managed by the Department for Science, Innovation and Technology (DSIT), seeks to address AI-related risks such as deepfakes, misinformation, and cyberattacks. It also aims to prevent unexpected AI failures in sectors like finance and healthcare, where the consequences could be severe. DSIT is partnering with the Engineering and Physical Sciences Research Council (EPSRC) and Innovate UK, both part of UK Research and Innovation (UKRI), to deliver the program.

Central to the government’s strategy is strengthening public confidence in AI systems. The government considers this trust vital for realizing AI’s potential to boost productivity and future-proof public services. As a next step, it plans to introduce targeted legislation for companies that are developing powerful AI models. These laws are intended to keep regulation proportionate, avoiding broad, blanket rules that could stifle innovation while still maintaining stringent safety standards.

Systemic Safety Grants Programme

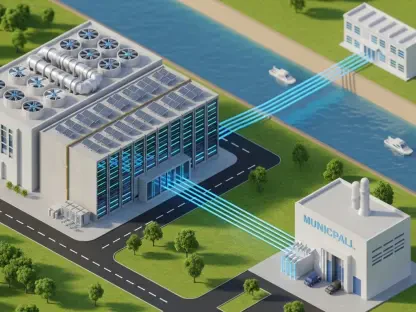

At the heart of the UK’s AI safety initiative is the Systemic Safety Grants Programme, launched by the newly established AI Safety Institute. In its initial phase, the program is expected to fund approximately 20 projects with up to £200,000 each, for a total of about £4 million. The overall fund stands at £8.5 million, which leaves room for further phases of funding. Researchers are invited to submit proposals by November 26. Proposals should focus on addressing potential AI risks and critical issues, particularly in sectors like healthcare and energy. Winners will be announced by the end of January 2025, with funds set to be disbursed by February 2025.

The Systemic Safety Grants Programme aims to draw on a broad range of expertise from industry and academia so that AI systems are safe and reliable when they are deployed. Beyond funding individual projects, the initiative is meant to contribute to a wider framework for developing secure AI technologies. By bringing together researchers from different fields, the program hopes to produce practical solutions to the most pressing AI safety concerns and to support the rollout of AI technologies that the public can trust.

Importance of Public Trust

Peter Kyle, the Secretary of State for Science, Innovation and Technology, has stressed the urgency of adopting AI to stimulate economic growth and improve public services. He has also emphasized that public trust is essential for that adoption to succeed. Without public confidence, the deployment of AI technologies could face significant resistance, undermining the benefits these systems offer. The program’s dual focus on adoption and safety is designed to address both concerns.

Public trust is therefore a core component of the government’s AI strategy. By working to ensure that AI systems are safe and reliable, the government aims to build a solid foundation for future innovation. The same focus on trust underlies the targeted legislation for AI companies. Together, these measures are expected to support reliable AI innovation across diverse sectors, driving productivity and enhancing public services.

Targeted Legislation and Collaborative Efforts

A key aspect of the government’s AI safety strategy is targeted legislation designed specifically for companies developing powerful AI models. Rather than imposing broad, blanket rules that could stifle innovation, these laws aim to create a proportionate regulatory environment. The intent is that AI technologies can flourish within a framework that prioritizes safety and reliability.

Collaboration between government, industry, and academia is the other pillar of the strategy. The Systemic Safety Grants Programme is an example of how the public and private sectors can work together on complex challenges. By pooling resources and expertise, the program aims to develop a comprehensive safety framework for AI technologies and to produce solutions to the many risks associated with AI, so that these technologies are both safe and effective for public use.

Conclusion

The UK’s systemic AI safety research program, managed by DSIT in partnership with EPSRC and Innovate UK, targets threats such as deepfakes, misinformation, and cyberattacks, with the goal of preventing catastrophic failures in critical sectors such as finance and healthcare.

Building public trust in AI systems remains the central aim. The government views that confidence as crucial for using AI to enhance productivity and secure public services for the future, and it proposes targeted legislation for companies developing powerful AI models so that regulation stays balanced, encouraging innovation while upholding rigorous safety standards.